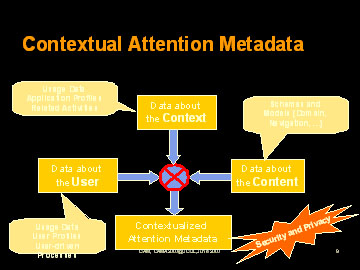

In order to facilitate access to learning resources on a personal and individual level, today's systems rely on simple and often stereotypical descriptions of the user that can be captured within the system. Consequently, adaptation and personalisation processes offer only limited support, because they have only limited observations of the users and their activities. Amazon's recommendations are a well-known example. The system cannot detect the purpose for which users are searching for or buying something, so it bases its recommendations on their complete search and purchase history. As a result, some recommendations are unsuited to the actual context. Furthermore, such monolithic systems describe users in isolation: their descriptions can neither be exchanged with nor extended by descriptions from other systems. The models that capture contextual information hold only limited metadata about the contexts, the users and the contents, and they only allow captured information to be correlated within their own specific context. We suggest using contextualised attention metadata (CAM) to capture the user's attention in application scenarios such as learning and business process optimisation.

Tracking users' attention with contextualised attention metadata may give insight into which tasks users are engaged in during a live virtual meeting. CAM reflects the users' context, preferences and browsing paths. Following the CAM approach in FM may improve multi-tasking in virtual meetings and serve as a foundation for recommender systems that match users' behaviour.
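To make the contrast in the Amazon example concrete, the following Python sketch compares a context-blind interest profile with one restricted to a single context. It is a minimal illustration under stated assumptions: the `AttentionEvent` record, its fields and the `top_topics` function are hypothetical simplifications invented here, not the actual CAM schema or any published CAM implementation, and counting attention events is only one simple way to derive interests.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class AttentionEvent:
    """A hypothetical, simplified CAM-style record (not the actual CAM schema)."""
    user: str     # who paid attention
    topic: str    # what the attention was directed at
    action: str   # e.g. "search", "open", "buy"
    context: str  # e.g. "work", "gift", "meeting"


def top_topics(events: Iterable[AttentionEvent], user: str,
               context: Optional[str] = None, k: int = 3) -> List[str]:
    """Return the user's k most-attended topics.

    With context=None the whole history is counted, mimicking a
    context-blind recommender; otherwise only events recorded in the
    given context contribute.
    """
    counts = Counter(
        e.topic
        for e in events
        if e.user == user and (context is None or e.context == context)
    )
    return [topic for topic, _ in counts.most_common(k)]


# Hypothetical sample history for one user across two contexts.
history = [
    AttentionEvent("alice", "python", "search", "work"),
    AttentionEvent("alice", "python", "open", "work"),
    AttentionEvent("alice", "toys", "search", "gift"),
    AttentionEvent("alice", "toys", "search", "gift"),
    AttentionEvent("alice", "toys", "buy", "gift"),
]

print(top_topics(history, "alice"))                  # ['toys', 'python']
print(top_topics(history, "alice", context="work"))  # ['python']
```

In the context-blind call, the gift purchases dominate the profile even while the user is working; filtering on the recorded context keeps only the attention that matches the current task, which is the kind of distinction the contextual part of CAM is meant to enable.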

For more information, please contact:

Dr Martin Wolpers

Fraunhofer FIT.ICON

Schloss Birlinghoven

53754 Sankt Augustin, Germany